A High-Stakes Decision Beneath the Waves

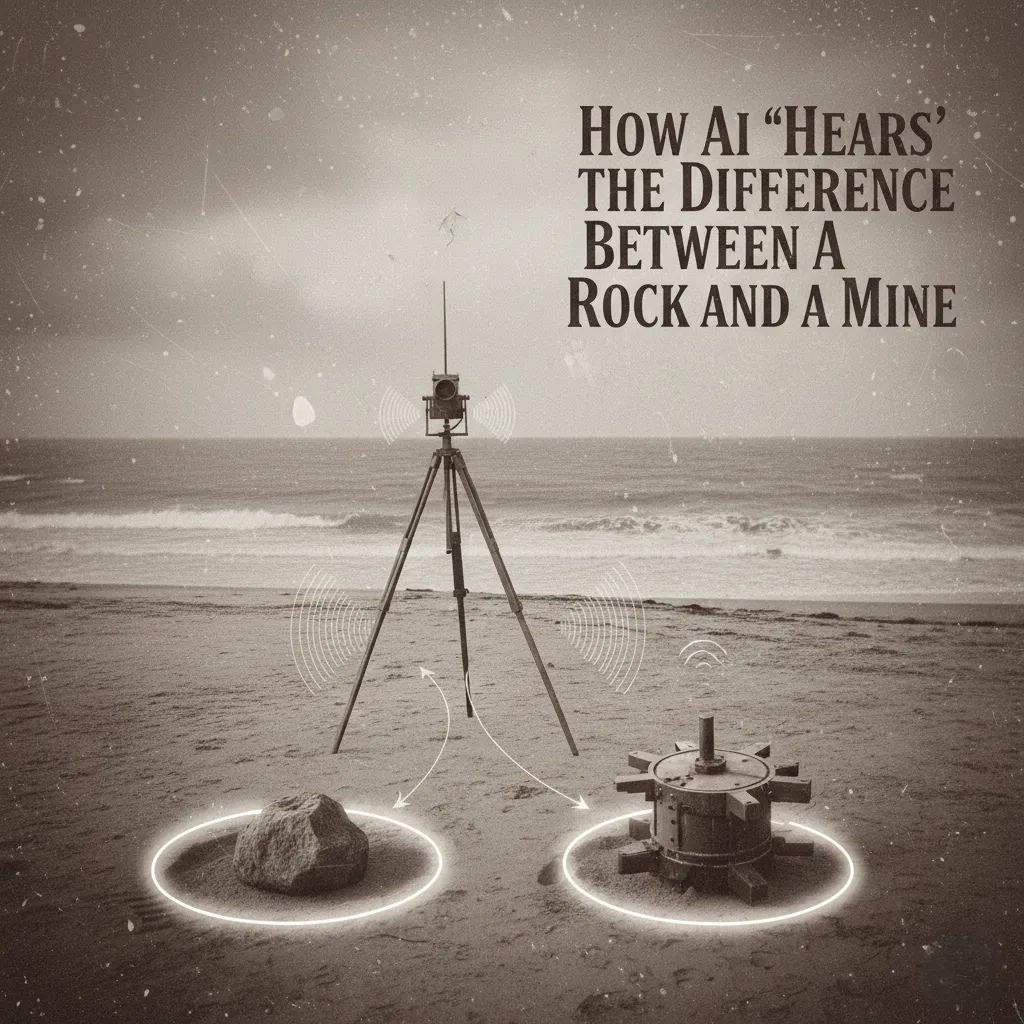

Deep beneath the ocean surface, an autonomous submarine scans its surroundings.

A sharp sonar ping echoes back—not as a picture, but as a stream of data.

The system must make a critical call: Is it a harmless boulder? Or a naval mine left behind from a forgotten conflict?

One wrong decision could have catastrophic consequences.

In this issue, we’ll explore how AI can learn to tell the difference using nothing more than sonar data.

Unlike images or text, this dataset doesn’t look like something humans can easily interpret.

It contains 208 sonar signals. Each one is labeled as either rock or mine and is represented by 60 numerical features: the energy of the returning echo in 60 different frequency bands.

Think of each signal as a unique “acoustic chord.” Rocks and mines produce chords that sound slightly different.

The AI’s job is to learn which is which.

An analogy:

Just as clapping in a dark room can tell you whether you’re near a wall or a curtain, sonar echoes carry hidden clues, if you know how to listen.

Dataset: Sonar.csv

We load the sonar dataset with two Python libraries: pandas (for data handling) and scikit-learn (for machine learning).
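Here is a minimal loading sketch. It assumes `Sonar.csv` has no header row, has 60 numeric feature columns, and ends with a label column holding `R` (rock) or `M` (mine). That layout and the helper name `load_sonar` are our assumptions, not details taken from the notebook, so adjust them to match your copy of the file.

```python
import pandas as pd


def load_sonar(path="Sonar.csv"):
    """Return (X, y) from the sonar CSV (hypothetical helper).

    Assumed layout (adjust if your file differs): no header row, 60 numeric
    feature columns, then one label column containing "R" (rock) or "M" (mine).
    """
    df = pd.read_csv(path, header=None)
    X = df.iloc[:, :-1].to_numpy(dtype=float)  # one row per signal, one column per frequency band
    if X.shape[1] != 60:
        raise ValueError(f"expected 60 feature columns, got {X.shape[1]}")
    labels = df.iloc[:, -1].astype(str).str.strip()
    y = (labels == "M").astype(int).to_numpy()  # encode mine as 1, rock as 0
    return X, y


# Usage: X, y = load_sonar("Sonar.csv")
# For the full dataset, X.shape should be (208, 60).
```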

The 208 signals are split into 90% training data and 10% testing data.

Holding out unseen data lets us check whether the model has learned patterns that generalize, rather than just memorizing the training examples.
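The split itself takes one call to scikit-learn’s `train_test_split`. In this sketch, `split_sonar` is a hypothetical helper, `stratify=y` keeps the rock/mine ratio similar in both subsets, and the seed is an arbitrary choice. The notebook’s exact settings may differ. The `__main__` block uses random placeholder numbers with the dataset’s shape, not real sonar readings.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def split_sonar(X, y, test_size=0.1, seed=1):
    """Hold out test_size of the signals for testing (hypothetical helper)."""
    # stratify=y keeps the class balance similar in train and test;
    # the seed is an assumption chosen only for reproducibility
    return train_test_split(X, y, test_size=test_size, stratify=y, random_state=seed)


if __name__ == "__main__":
    # Placeholder data with the dataset's shape (208 x 60), NOT real sonar readings
    rng = np.random.default_rng(0)
    X = rng.random((208, 60))
    y = np.arange(208) % 2  # placeholder labels, alternating 0/1
    X_train, X_test, y_train, y_test = split_sonar(X, y)
    print(len(X_train), len(X_test))  # 187 21
```

With 208 signals and `test_size=0.1`, scikit-learn rounds the test share up, so the test set gets 21 signals and the training set gets 187.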

We start with Logistic Regression, a classic algorithm for binary classification.

In simple terms, it finds the best linear boundary between rocks and mines. For each new signal, it estimates the probability that the signal is a mine.
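For readers who like to see the math, here is the standard logistic regression model written in our own notation. We label mine as 1 and rock as 0; that encoding is our choice, not something stated in the notebook. For a signal with feature vector $\mathbf{x}$, the model computes:

$$
p(\text{mine} \mid \mathbf{x}) = \sigma\left(\mathbf{w}^{\top}\mathbf{x} + b\right) = \frac{1}{1 + e^{-(\mathbf{w}^{\top}\mathbf{x} + b)}}, \qquad \mathbf{x}, \mathbf{w} \in \mathbb{R}^{60}
$$

The "boundary" is the set of signals where $p = 0.5$, which is exactly where $\mathbf{w}^{\top}\mathbf{x} + b = 0$. That set is a flat hyperplane in 60-dimensional space. Training chooses $\mathbf{w}$ and $b$ to minimize the log-loss over the $n$ training signals, where $y_i \in \{0, 1\}$ and $p_i = p(\text{mine} \mid \mathbf{x}_i)$:

$$
\mathcal{L}(\mathbf{w}, b) = -\sum_{i=1}^{n}\Big[\, y_i \log p_i + (1 - y_i)\log\left(1 - p_i\right) \Big]
$$

By default, scikit-learn’s `LogisticRegression` also adds an L2 penalty on $\mathbf{w}$ to this loss.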

It learns from the training data by adjusting one weight per feature (60 in all, plus a bias term), picking up subtle combinations of frequency bands that signal danger.
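Training and scoring take only a few lines. This is a sketch under assumptions: `train_and_score` is a hypothetical helper, and both `max_iter=1000` (raised from scikit-learn’s default of 100) and the random seed are our choices. Your accuracies will therefore not necessarily match the figures reported below.

```python
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split


def train_and_score(X, y, test_size=0.1, seed=1):
    """Fit logistic regression on a 90/10 split; return (train_acc, test_acc, model).

    Hypothetical helper: the seed and max_iter are assumptions, not the notebook's values.
    """
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=test_size, stratify=y, random_state=seed
    )
    # max_iter raised from the default of 100 so the solver has room to converge
    model = LogisticRegression(max_iter=1000)
    model.fit(X_train, y_train)  # learns one weight per feature plus a bias term
    train_acc = model.score(X_train, y_train)  # accuracy on signals the model has seen
    test_acc = model.score(X_test, y_test)     # accuracy on held-out signals
    return train_acc, test_acc, model


# Usage, assuming X (n x 60) and y (1 = mine, 0 = rock) are already loaded:
# train_acc, test_acc, model = train_and_score(X, y)
# print(f"Train: {train_acc:.1%}  Test: {test_acc:.1%}")
# model.predict(X[:1])  # classify a single signal (note the 2D slice)
```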

Training Accuracy: 83.4%

Testing Accuracy: 76.2%
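It helps to translate these percentages back into signal counts. Suppose the split was made with `train_test_split(test_size=0.1)`, which rounds the test share up (an assumption about how the notebook split the data). Then the test set holds just 21 signals, so 76.2% means 16 correct predictions, and each single misclassification moves test accuracy by almost 5 points. The arithmetic:

```python
import math

n_total = 208
n_test = math.ceil(0.1 * n_total)  # test share rounded up -> 21
n_train = n_total - n_test         # 187

# Correct predictions implied by the reported accuracies
train_correct = round(0.834 * n_train)  # 156
test_correct = round(0.762 * n_test)    # 16

print(n_train, n_test)                               # 187 21
print(train_correct, test_correct)                   # 156 16
print(f"{100 * train_correct / n_train:.1f}%")       # 83.4%
print(f"{100 * test_correct / n_test:.1f}%")         # 76.2%
print(f"{100 / n_test:.1f} points per test signal")  # 4.8 points per test signal
```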

Even with a relatively small and abstract dataset, a simple model can learn meaningful distinctions.

But let’s be clear:

In real applications, more advanced models (like neural networks) and much larger datasets would likely be needed.

The true value of this project lies not in mine detection itself, but in what it teaches about classification, one of the foundational tasks in machine learning.

The same principles power countless real-world applications.

Different domains, same core process: find the hidden signal in the noise.

This project is a powerful reminder that AI doesn’t need massive language models to be impactful.

Sometimes, a simple, well-trained algorithm can solve problems with enormous consequences.

Whether it’s distinguishing a rock from a mine—or a tumor from a harmless cyst—the same blueprint applies.

If you’re curious, try running the Sonar Classification Notebook yourself.

Can you improve on 76% by experimenting with different algorithms?
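One way to take on that challenge is to compare several algorithms with stratified 5-fold cross-validation. Because every signal lands in a test fold exactly once, this gives a steadier estimate than a single 21-signal test set. The candidate models, their settings, the added feature scaling (`StandardScaler`), and the helper name `compare_models` are our assumptions, not the notebook’s. Treat this as a starting point rather than a benchmark.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def compare_models(X, y, n_splits=5, seed=0):
    """Return {name: (mean_accuracy, std)} from stratified k-fold cross-validation."""
    # Scaling sits inside each pipeline, so it is fit only on the training folds
    candidates = {
        "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
        "k_nearest_neighbors": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
        "svm_rbf": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
        "random_forest": RandomForestClassifier(n_estimators=100, random_state=seed),
        # A small neural network, in the spirit of the "more advanced models" mentioned above
        "mlp": make_pipeline(
            StandardScaler(),
            MLPClassifier(hidden_layer_sizes=(32,), max_iter=2000, random_state=seed),
        ),
    }
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    results = {}
    for name, model in candidates.items():
        scores = cross_val_score(model, X, y, cv=cv, scoring="accuracy")
        results[name] = (scores.mean(), scores.std())
    return results


# Usage, assuming X and y were loaded from Sonar.csv:
# for name, (mean, std) in compare_models(X, y).items():
#     print(f"{name:20s} {mean:.3f} +/- {std:.3f}")
```

The cross-validated score for logistic regression won’t exactly match the 76.2% single-split figure, and that is the point. Comparing every model on the same folds makes the comparison fairer.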

Get the Sonar Classification Notebook: Sonar Notebook

For those in non-technical fields:

Think about your domain — what “rocks and mines” are hiding in your data, waiting for AI to classify them?

— SHR

Subscribe to our newsletter!